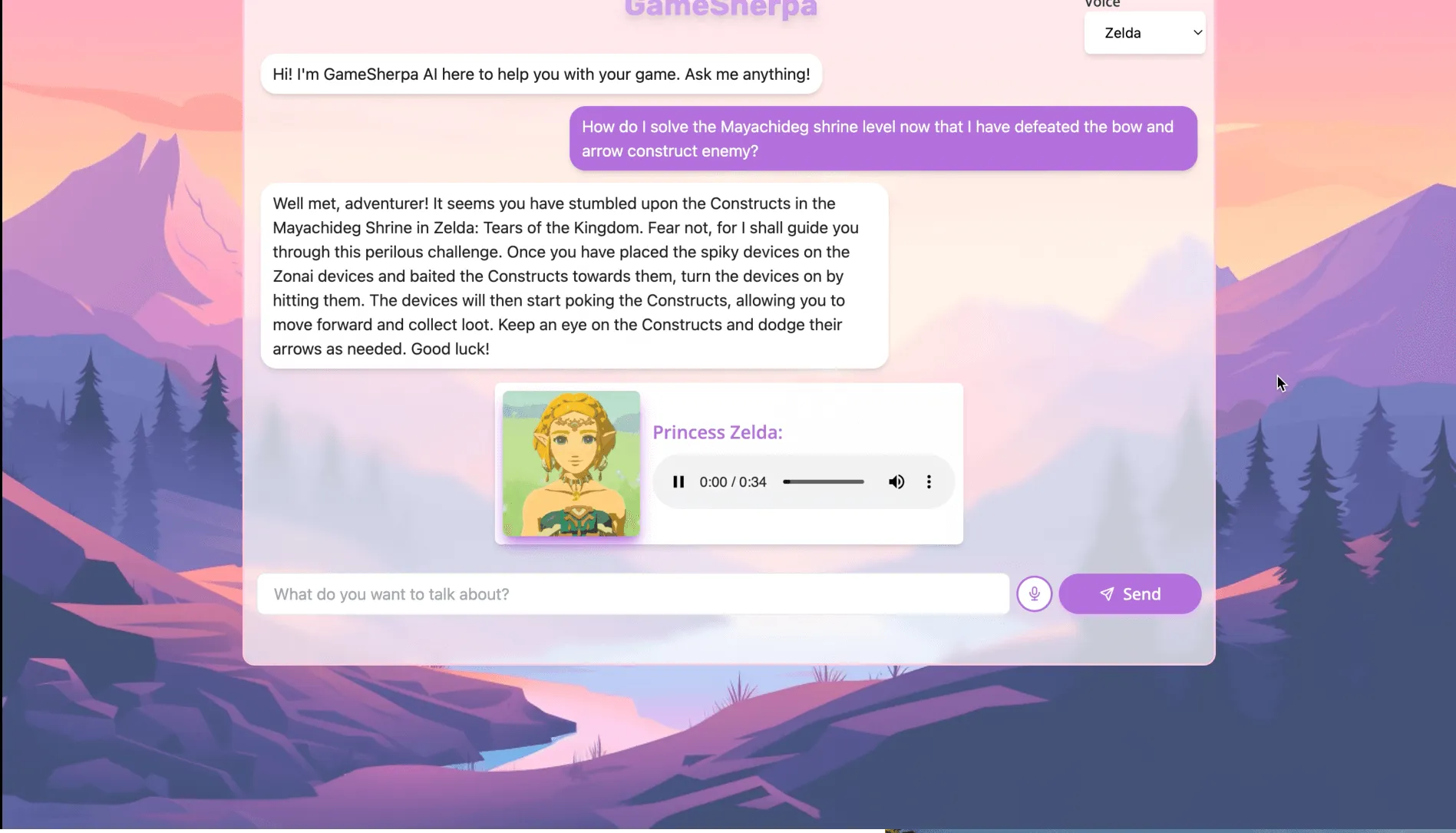

GameSherpa

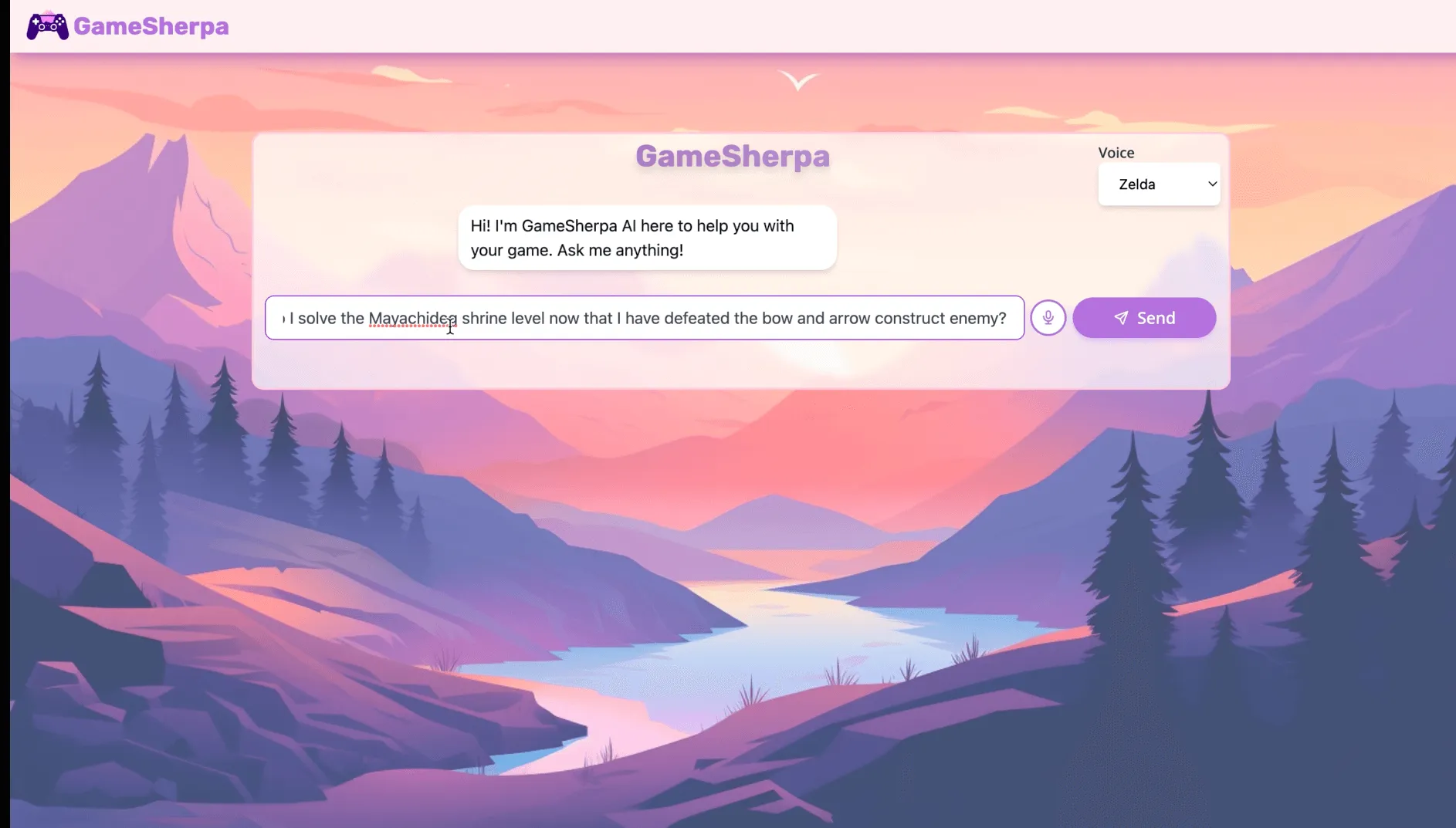

I built this project with my buddy Dylan at the AI Tinkerers summer hackathon hosted by Madrona Venture Group. The idea came to me while I was playing Zelda one day: "Why can't I ask an AI agent for help while gaming? What if this agent were friendly and could speak back to me in the voice of a video game character?" So that's what we set out to build! We only had six hours, but in that time I was able to spin up a frontend and an AI agent on the backend that could search the internet for information relevant to your question. It used OpenAI Whisper to transcribe voice input, LangChain to power the agent, and the ElevenLabs API to speak the responses back to me in the voice of Princess Zelda. We also hoped to add a multimodal model that would receive a screenshot of the game for extra context, but that proved a bridge too far in six hours.
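The flow described above (voice in, transcription, agent answer, spoken reply) boils down to three stages run in order. Here's a minimal TypeScript sketch of that orchestration. It isn't the code we wrote at the hackathon: `askSherpa`, `SherpaStages`, and the stage signatures are hypothetical, and each stage is passed in so that Whisper, a LangChain agent, and ElevenLabs could be plugged in for real (or faked for testing).

```typescript
// Hypothetical stage signatures. In a real app these would wrap
// Whisper (speech-to-text), a LangChain agent (answering), and
// ElevenLabs (text-to-speech) respectively.
type Transcribe = (audio: Uint8Array) => Promise<string>;
type Answer = (question: string) => Promise<string>;
type Speak = (text: string) => Promise<Uint8Array>;

interface SherpaStages {
  transcribe: Transcribe;
  answer: Answer;
  speak: Speak;
}

interface SherpaReply {
  question: string; // what the player said, as transcribed
  answer: string; // the agent's text answer
  audio: Uint8Array; // the answer rendered as speech
}

// Runs one recorded voice question through the three stages in order.
async function askSherpa(
  audio: Uint8Array,
  stages: SherpaStages,
): Promise<SherpaReply> {
  const question = (await stages.transcribe(audio)).trim();
  if (question.length === 0) {
    // Don't spend an agent call (or TTS credits) on silence.
    throw new Error("No speech detected in the recording");
  }
  const answer = await stages.answer(question);
  const speech = await stages.speak(answer);
  return { question, answer, audio: speech };
}
```

Injecting the stages keeps the orchestration testable with fakes, and it would leave room for the screenshot-aware multimodal stage we didn't get to.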

We placed third in our group of six teams, which meant we just missed the second round of pitches, but to be fair, the two teams that advanced deserved it. What an experience to build such a cool app at a prominent VC firm on the 34th floor of a building in downtown Seattle, with panoramic views of the Puget Sound. I'm so grateful for the opportunity to participate in this hackathon, and I hope to do more in the future!

Details

Tech Stack

Next.js

TypeScript

Tailwind

LangChain

Whisper

ElevenLabs

Features

- AI audio transcription

- AI agent with browsing capabilities

- Voice cloning with the ElevenLabs API

- Beautiful, functional UI (OK, I might be tooting my own horn here, but come on! Just look at it!)
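For the voice feature, one way to get speech back from ElevenLabs is its REST text-to-speech endpoint. The sketch below only builds the request (URL, headers, JSON body) so it can be checked without a network call. The endpoint path and `xi-api-key` header follow ElevenLabs' public API docs as I understand them, so double-check the current docs. `buildTtsRequest`, the default `modelId`, and the voice ID are illustrative assumptions, not what we shipped.

```typescript
// Shape of a text-to-speech request, ready to hand to fetch().
interface TtsRequest {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
}

// Hypothetical helper: builds (but does not send) the ElevenLabs request
// for one spoken reply. voiceId would be the ID of the cloned voice;
// the default modelId is an assumption, so check ElevenLabs' model list.
function buildTtsRequest(
  text: string,
  apiKey: string,
  voiceId: string,
  modelId = "eleven_multilingual_v2",
): TtsRequest {
  if (text.trim() === "") {
    throw new Error("Nothing to speak");
  }
  return {
    url: `https://api.elevenlabs.io/v1/text-to-speech/${encodeURIComponent(voiceId)}`,
    init: {
      method: "POST",
      headers: {
        "xi-api-key": apiKey, // keep this server-side, never in the browser
        "Content-Type": "application/json",
        Accept: "audio/mpeg",
      },
      body: JSON.stringify({ text, model_id: modelId }),
    },
  };
}

// Usage on the backend (not run here):
//   const { url, init } = buildTtsRequest(answer, apiKey, voiceId);
//   const res = await fetch(url, init);
//   const mp3 = new Uint8Array(await res.arrayBuffer());
```

Keeping request construction separate from `fetch` makes the headers and body easy to unit-test, and keeps the API key handling in one place on the server.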

Learnings

- ElevenLabs is scary good at cloning voices

- Pitching a project at a VC took this hackathon to another level

- Next.js is still my baby. Easily the best React framework out there.

- OpenAI Whisper is shockingly easy to use